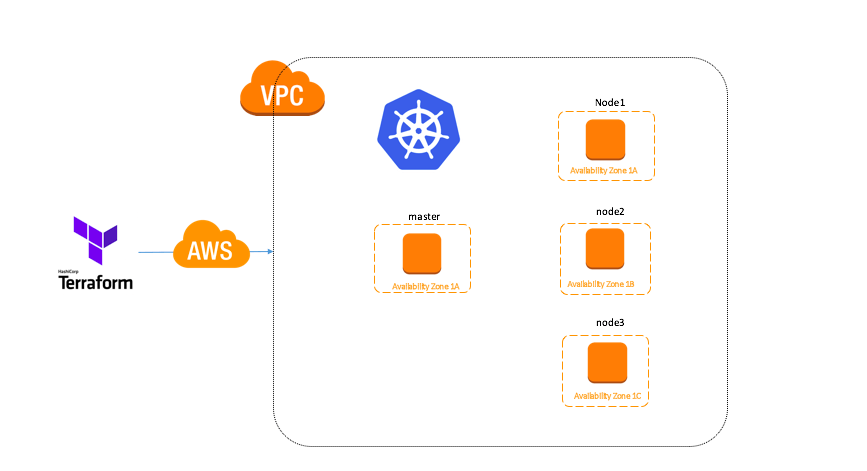

This example project helps you create a kops cluster that spans multiple Availability Zones (AZs) within a single AWS region.

It assumes that you have the AWS CLI installed and an IAM user configured.

The IAM user that creates the Kubernetes cluster must have the following permissions:

- AmazonEC2FullAccess

- AmazonRoute53FullAccess

- AmazonS3FullAccess

- IAMFullAccess

- AmazonVPCFullAccess
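The permissions above are AWS managed policies, so they can be attached from the command line. The following is a minimal sketch rather than a required step: the user name `kops` and the `KOPS_IAM_USER` and `DRY_RUN` variables are assumptions introduced for illustration. By default it only prints the `aws iam attach-user-policy` commands it would run.

```shell
#!/usr/bin/env bash
# Sketch: attach the five AWS managed policies listed above to an IAM user.
# Assumption: the user name "kops" is a placeholder; override it with KOPS_IAM_USER.
KOPS_IAM_USER="${KOPS_IAM_USER:-kops}"
POLICIES="AmazonEC2FullAccess AmazonRoute53FullAccess AmazonS3FullAccess IAMFullAccess AmazonVPCFullAccess"

# Build the ARN of an AWS managed policy from its name.
policy_arn() {
  printf 'arn:aws:iam::aws:policy/%s\n' "$1"
}

# Print the attach commands by default; set DRY_RUN=0 to actually run them.
attach_policies() {
  for policy in $POLICIES; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "aws iam attach-user-policy --user-name $KOPS_IAM_USER --policy-arn $(policy_arn "$policy")"
    else
      aws iam attach-user-policy --user-name "$KOPS_IAM_USER" --policy-arn "$(policy_arn "$policy")"
    fi
  done
}
```

Calling `attach_policies` prints five commands for review; `DRY_RUN=0 attach_policies` runs them with whatever credentials the AWS CLI is configured to use.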

Prerequisites:

- Terraform (note: install version 0.11.7) https://www.terraform.io/downloads.html

- Install kops (this example uses kops 1.8.1) https://github.com/kubernetes/kops

For Mac

brew update && brew install kops

Or from GitHub

curl -Lo kops https://github.com/kubernetes/kops/releases/download/1.8.1/kops-darwin-amd64

chmod +x ./kops

sudo mv ./kops /usr/local/bin/

For Linux

wget -O kops https://github.com/kubernetes/kops/releases/download/1.8.1/kops-linux-amd64

chmod +x ./kops

sudo mv ./kops /usr/local/bin/

- Install kubectl https://kubernetes.io/docs/tasks/tools/install-kubectl/

For Mac

curl -LO https://storage.googleapis.com/kubernetes-release/release/v1.8.11/bin/darwin/amd64/kubectl

chmod +x ./kubectl

sudo mv ./kubectl /usr/local/bin/kubectl

For Ubuntu

curl -LO https://storage.googleapis.com/kubernetes-release/release/v1.8.11/bin/linux/amd64/kubectl

chmod +x ./kubectl

sudo mv ./kubectl /usr/local/bin/kubectl
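The download steps above differ only in the OS part of the URL, so they can be folded into small helpers. This is a sketch under assumptions: the function names and the `KOPS_VERSION` and `KUBECTL_VERSION` variables are made up here, and only amd64 is handled, as in the commands above. `check_tools` then confirms that the binaries ended up on the PATH.

```shell
#!/usr/bin/env bash
# Versions used in this walkthrough; override through the environment if needed.
KOPS_VERSION="${KOPS_VERSION:-1.8.1}"
KUBECTL_VERSION="${KUBECTL_VERSION:-v1.8.11}"

# Map `uname -s` output (Darwin or Linux) to the lowercase name used in the URLs.
detect_os() {
  uname -s | tr '[:upper:]' '[:lower:]'
}

# $1 = version, $2 = os (darwin or linux)
kops_url() {
  printf 'https://github.com/kubernetes/kops/releases/download/%s/kops-%s-amd64\n' "$1" "$2"
}

# $1 = version (with leading v), $2 = os (darwin or linux)
kubectl_url() {
  printf 'https://storage.googleapis.com/kubernetes-release/release/%s/bin/%s/amd64/kubectl\n' "$1" "$2"
}

# Report which of the given tools are on the PATH; return non-zero if any is missing.
check_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found: $tool"
    else
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `curl -Lo kops "$(kops_url "$KOPS_VERSION" "$(detect_os)")"` fetches the matching kops binary, and `check_tools aws terraform kops kubectl` verifies the setup before continuing. The installed versions can then be confirmed with `terraform version`, `kops version`, and `kubectl version --client`.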

Getting started

git clone git@github.com:jaganthoutam/ubuntu-k8s-kops.git

cd ubuntu-k8s-kops/

Replace the default value of domain_name with your public DNS zone name:

vim example/variables.tf

variable "domain_name" {

default = "k8s.thoutam.com"

}
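As an alternative to editing the file, Terraform also reads root-module variables from `TF_VAR_<name>` environment variables. The sketch below assumes that `example/` is the directory Terraform runs in, so that `domain_name` is a root-module variable. The helper name `set_domain_name` and the zone `k8s.example.com` are placeholders, and the check is only a rough sanity test, not full DNS validation.

```shell
#!/usr/bin/env bash
# Sketch: override domain_name for the current shell without editing variables.tf.
set_domain_name() {
  case "$1" in
    "" | *" "* | .* | *.)
      # Empty, contains a space, or starts/ends with a dot.
      echo "invalid zone name: '$1'" >&2
      return 1
      ;;
    *.*)
      export TF_VAR_domain_name="$1"
      ;;
    *)
      echo "expected a dotted DNS name, got: '$1'" >&2
      return 1
      ;;
  esac
}
```

After `set_domain_name k8s.example.com`, later `terraform plan` and `terraform apply` runs in the same shell use that value instead of the default in `variables.tf`.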

Edit the cluster details (node_asg_desired, instance_key_name, etc.):

vim example/kops_clusters.tf

**** Edit the module according to your infra name ****

module "staging" {

source = "../module"

# source = "./"

kubernetes_version = "1.8.11"

sg_allow_ssh = "${aws_security_group.allow_ssh.id}"

sg_allow_http_s = "${aws_security_group.allow_http.id}"

cluster_name = "staging"

cluster_fqdn = "staging.${aws_route53_zone.k8s_zone.name}"

route53_zone_id = "${aws_route53_zone.k8s_zone.id}"

kops_s3_bucket_arn = "${aws_s3_bucket.kops.arn}"

kops_s3_bucket_id = "${aws_s3_bucket.kops.id}"

vpc_id = "${aws_vpc.main_vpc.id}"

instance_key_name = "${var.key_name}"

node_asg_desired = 3

node_asg_min = 3

node_asg_max = 3

master_instance_type = "t2.medium"

node_instance_type = "m4.xlarge"

internet_gateway_id = "${aws_internet_gateway.public.id}"

public_subnet_cidr_blocks = ["${local.staging_public_subnet_cidr_blocks}"]

kops_dns_mode = "private"

}
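When changing the three `node_asg_*` values, keep in mind that an AWS Auto Scaling group requires min <= desired <= max. The helper below is a hypothetical pre-flight check (the name `asg_sizes_ok` is not part of the project) that can be run before `terraform plan`.

```shell
#!/usr/bin/env bash
# Sketch: verify that Auto Scaling group sizes are consistent.
# $1 = node_asg_min, $2 = node_asg_desired, $3 = node_asg_max
asg_sizes_ok() {
  [ "$1" -le "$2" ] && [ "$2" -le "$3" ]
}
```

With the values from the module above, `asg_sizes_ok 3 3 3` succeeds, while `asg_sizes_ok 3 2 5` fails because the desired count is below the minimum.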

To force a single master, set force_single_master to true. This is useful when a master per AZ is not required, or when running in a region with only 2 AZs.

vim module/variables.tf

**** force_single_master should be true if you want a single master ****

variable "force_single_master" {

default = true

}
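To decide whether the setting is needed, the number of AZs in the target region can be checked with the AWS CLI. This is a sketch: the function names are made up here, and the threshold of 3 is an assumption derived from the 2-AZ case mentioned above.

```shell
#!/usr/bin/env bash
# Sketch: count the Availability Zones currently available in a region.
# $1 = region, for example eu-west-1
count_azs() {
  aws ec2 describe-availability-zones --region "$1" \
    --filters Name=state,Values=available \
    --query 'length(AvailabilityZones)' --output text
}

# Suggest a value for force_single_master from an AZ count.
# Assumption: fewer than 3 AZs means a master per AZ is not practical.
suggest_force_single_master() {
  if [ "$1" -lt 3 ]; then
    echo true
  else
    echo false
  fi
}
```

For example, `suggest_force_single_master "$(count_azs eu-west-1)"` prints the value to put in `module/variables.tf`.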

All good now. Run terraform plan to check for errors. If everything is clean, run terraform apply to build the cluster.

cd example

terraform plan

(The output looks something like this)

......

......

+ module.staging.null_resource.delete_tf_files

id: <computed>

Plan: 6 to add, 0 to change, 1 to destroy.

------------------------------------------------------------------------

......

......
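The `Plan:` summary line is worth reading before applying, especially the destroy count. The sketch below pulls that number out of plan output on stdin. The helper names are assumptions, and it expects uncolored output in the format shown above (Terraform's `-no-color` flag helps here).

```shell
#!/usr/bin/env bash
# Sketch: print the "to destroy" count from a Terraform plan summary read on stdin.
# Prints nothing if no "Plan:" line is present.
plan_destroy_count() {
  sed -n 's/^Plan: .* \([0-9][0-9]*\) to destroy\.$/\1/p'
}

# Return non-zero when the plan on stdin would destroy at least one resource.
review_plan() {
  count="$(plan_destroy_count)"
  if [ -n "$count" ] && [ "$count" -gt 0 ]; then
    echo "plan destroys $count resource(s); review before applying" >&2
    return 1
  fi
  return 0
}
```

A possible workflow, assuming `terraform init` has already been run in `example/`: `terraform plan -no-color | tee plan.txt | review_plan`, then `terraform apply` once the plan looks right.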

MASTER_ELB_CLUSTER1=$(terraform state show module.staging.aws_elb.master | grep dns_name | cut -f2 -d= | xargs)

kubectl config set-cluster staging.k8s.thoutam.com --insecure-skip-tls-verify=true --server=https://$MASTER_ELB_CLUSTER1
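The pipeline above works in three steps: `grep` keeps the `dns_name` line of the state output, `cut` takes everything after the `=`, and `xargs` trims the surrounding whitespace. The sketch below wraps the same steps in a function and adds a guard for an empty result. The function names are assumptions, and the state output is assumed to use the `key = value` layout that the pipeline expects.

```shell
#!/usr/bin/env bash
# Sketch: extract the dns_name value from `terraform state show` output on stdin.
extract_dns_name() {
  grep dns_name | cut -f2 -d= | xargs
}

# $1 = cluster name in kubeconfig, $2 = Terraform address of the master ELB
set_cluster_server() {
  elb="$(terraform state show "$2" | extract_dns_name)"
  if [ -z "$elb" ]; then
    echo "no dns_name found for $2" >&2
    return 1
  fi
  kubectl config set-cluster "$1" --insecure-skip-tls-verify=true --server="https://$elb"
}
```

The equivalent of the two commands above is `set_cluster_server staging.k8s.thoutam.com module.staging.aws_elb.master`.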

And then test:

kubectl cluster-info

Kubernetes master is running at https://staging-master-999999999.eu-west-1.elb.amazonaws.com

KubeDNS is running at https://staging-master-999999999.eu-west-1.elb.amazonaws.com/api/v1/namespaces/kube-system/services/kube-dns/proxy

kubectl get nodes

NAME STATUS ROLES AGE VERSION

ip-172-20-25-99.eu-west-1.compute.internal Ready master 9m v1.8.11

ip-172-20-26-11.eu-west-1.compute.internal Ready node 3m v1.8.11

ip-172-20-26-209.eu-west-1.compute.internal Ready node 27s v1.8.11

ip-172-20-27-107.eu-west-1.compute.internal Ready node 2m v1.8.11
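Nodes can take a few minutes to join, as the AGE column shows. A small helper can count how many have reached Ready. This is a sketch: `count_ready_nodes` is a made-up name, and it relies on the STATUS column being the second field, as in the output above.

```shell
#!/usr/bin/env bash
# Sketch: count nodes whose STATUS column is exactly "Ready".
# Reads `kubectl get nodes` output on stdin; the header line is ignored
# because its second field is "STATUS".
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}
```

For the cluster above, `kubectl get nodes | count_ready_nodes` should reach 4 once the single master and the three nodes from node_asg_desired are all Ready.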

Credits: Original code is taken from here.